AI-Powered Virtual Assistants: A Huge Market That Starts With Simple Voice Annotation

The AI-powered virtual assistant market was estimated at $3.442 billion in 2019 and is expected to surpass $45.1 billion by 2027, growing at a compound annual growth rate (CAGR) of 37.7%. And all of this can start with simple voice annotation.
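As a quick sanity check on these figures, the sketch below recomputes the compound annual growth rate implied by the two market sizes. It assumes 2019 as the base year and an 8-year horizon; the function names are illustrative, and the underlying study may use a different base year, which would explain why the implied rate is close to, but not exactly, the quoted 37.7%.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the article, in billions of US dollars.
MARKET_2019 = 3.442
MARKET_2027 = 45.1
YEARS = 2027 - 2019  # assumption: growth is counted from 2019

rate = implied_cagr(MARKET_2019, MARKET_2027, YEARS)
projected = project(MARKET_2019, 0.377, YEARS)

print(f"Implied CAGR 2019-2027: {rate:.1%}")
print(f"2019 value compounded at 37.7% for {YEARS} years: ${projected:.1f}B")
```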

The feasibility and usefulness of AI-powered virtual assistants rest on both technical and behavioral factors. As demand for in-app assistance keeps growing, data is continuously fed into AI systems for training.

In other words, one of the most important ingredients of an AI-powered virtual assistant is its input data, which for voice products means annotated voice data.

The booming industry of AI and virtual assistants

For starters, an intelligent virtual assistant (IVA), also called an AI-powered virtual assistant, is software developed to provide responses similar to those of a human.

With such an assistant, we can ask questions, make arrangements or even request support from a human agent.

Why are virtual assistants on the rise?

Intelligent virtual assistants are widely used, mainly because they reduce the cost of handling customers. With quick responses in live chat or any other form of customer engagement, an IVA also helps boost customer satisfaction and save time.

Beyond this customer-facing role, an IVA also collects customer information and analyzes conversations and customer satisfaction survey responses, thereby helping organizations improve communication with their customers.

Virtual Assistant and voice annotation

Intelligent virtual assistants can act as the avatars of enterprises. They can dynamically read, understand and respond to customer queries, and eventually reduce manpower costs across departments.

We can see many IVAs in large enterprises, as they can help eliminate infrastructure setup costs. This is why IVA revenue has been so high in recent years and is likely to remain so in the years to come.

What can virtual assistants do?

AI-powered virtual assistants have been adopted almost everywhere: we can see them in our operating systems, mobile applications and chatbots. With machine learning, deep neural networks and other advances in AI technology, a virtual assistant can easily perform certain tasks.

Virtual assistants are very common in operating systems. They help with managing calendars, making arrangements, setting alarms, answering questions or even writing texts. A multitasking assistant like this operates at a large scale, and we might think that such applications are limited to operating systems.

However, with the soaring numbers of mobile users and mobile apps, many entrepreneurs and even start-ups are beginning to implement virtual assistants within their own product apps. This drives rising demand for domain-specific input data.

For example, a healthcare service app requires specific voice annotations regarding medical terms and other healthcare-related matters.

The ResearchAndMarkets.com report "Global Intelligent Virtual Assistant (IVA) Market 2019-2025: Industry Size, Share & Trends" indicates that:

- Smart speakers are developing at the fastest pace and emerging as the major domain for IVA.

- Still, text-to-speech is the largest segment in IVA. It is estimated to reach revenue of over $15.37 billion by 2025.

- North America is the dominant region in the IVA market, with healthcare as its main industry.

- The key players are Apple Inc., Oracle Corporation, CSS Corporation, WellTok Inc., CodeBaby Corporation, eGain Corporation, MedRespond, Microsoft, Next IT Corporation, Nuance Communications, Inc., and True Image Interactive Inc.

The report shows that the AI-powered virtual assistant market is growing fast. Each domain calls for a different approach to implementing an IVA.

For better service and business development, enterprises demand effective customer engagement, hence the growing number of virtual assistants being implemented in different products.

Currently, the intelligent virtual assistant market is mainly driven by the banking, financial services and insurance (BFSI) vertical, owing to its higher adoption and increasing IT investment. However, automotive and healthcare are the most lucrative vertical segments and are likely to remain so during the forecast period.

How can voice annotation help the IVA?

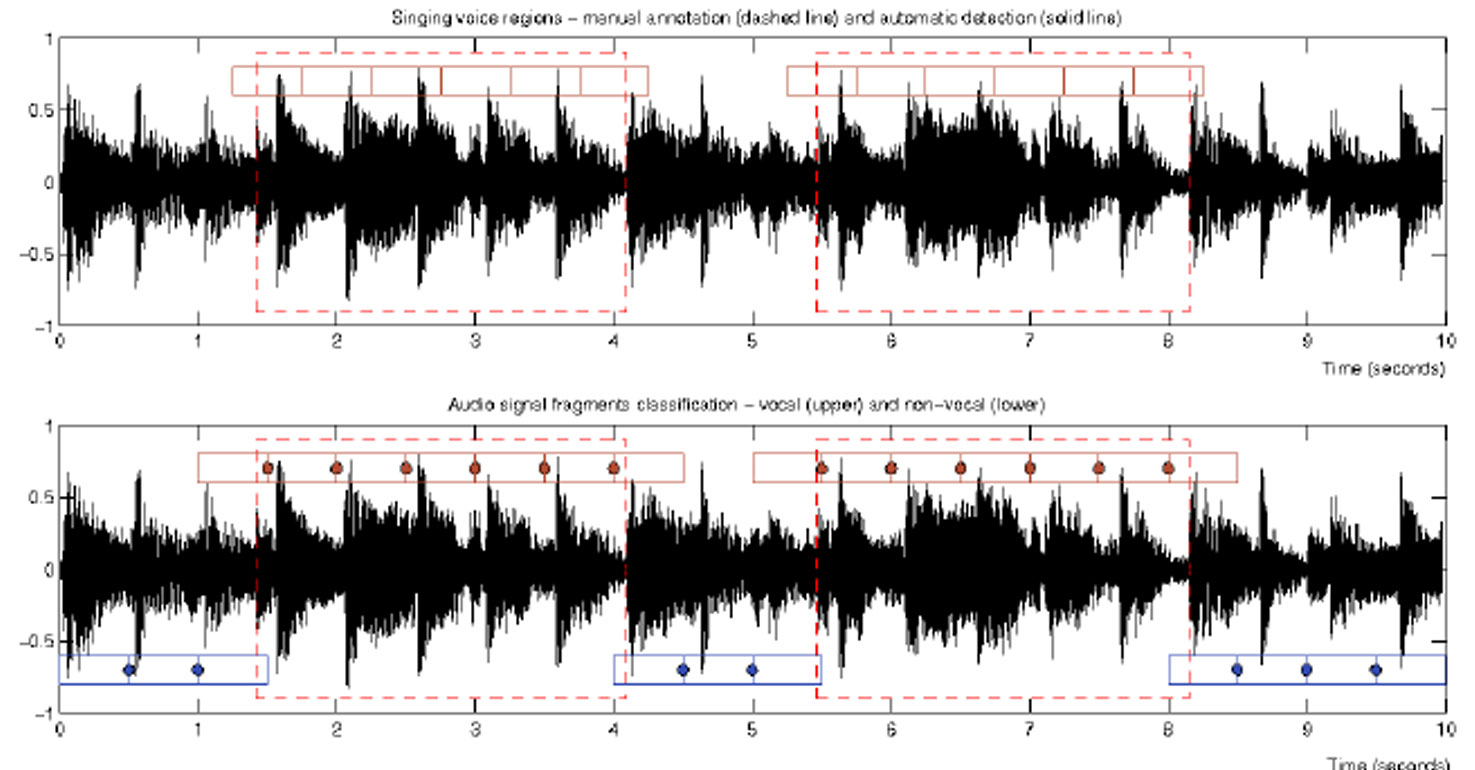

As virtual assistants appear in almost every aspect of life, including calling, shopping, music streaming and consulting, the need for voice data processing continues to grow. Besides speech-to-text and text-to-speech annotation, more advanced forms such as part-of-speech tagging and phonetic annotation are also in high demand.

Voice Annotation for Virtual Assistant

For an IVA system to operate properly, the developer has to consider different interaction methods, including:

- Text-to-text: Text-to-text annotation is not necessarily directly related to the operation of an IVA. Nevertheless, labeled texts help the machine understand human natural language. Poorly annotated texts can lead a machine to make grammatical errors or misunderstand customer queries.

- Speech-to-text: Speech-to-text annotation transcribes audio files into text, usually in a word processor to enable editing and search. Voice-enabled assistants like Siri, Alexa and Google Assistant are good examples of this.

- Text-to-speech: Text-to-speech annotation enables the machine to synthesize natural-sounding speech in a wide range of voices (male, female) and accents (for example, Northern, Central and Southern accents).

- Speech-to-speech: Speech-to-speech is the most advanced and complicated form of annotation. With this kind of input data, the AI can understand the user's speech and then answer or act accordingly.

Whichever method is used, we still have to collect data (voices, speeches, conversations) and then annotate it so that machine learning algorithms can learn to understand user input.

Voice annotation requires considerable effort to deliver understandable and useful datasets. Recruiting and training annotators alone takes a lot of time, not to mention the annotation work itself.
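To make the idea of annotated voice data more concrete, here is a minimal sketch of what a single speech-to-text annotation record might look like, together with a few basic quality checks. The class names, field names and checks are hypothetical and chosen only for illustration; real annotation projects define their own schemas and guidelines.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One annotated stretch of audio (hypothetical schema)."""
    start_s: float            # segment start, in seconds
    end_s: float              # segment end, in seconds
    speaker: str              # e.g. "patient" or "assistant"
    transcript: str           # what was said, as typed by the annotator
    labels: dict = field(default_factory=dict)  # optional tags such as intent


@dataclass
class AnnotatedClip:
    """An audio file plus all of its annotated segments."""
    audio_path: str
    duration_s: float
    language: str
    segments: list = field(default_factory=list)


def validate(clip):
    """Return a list of problems found; an empty list means the clip passed."""
    problems = []
    previous_end = 0.0
    for i, seg in enumerate(clip.segments):
        if seg.start_s >= seg.end_s:
            problems.append(f"segment {i}: start is not before end")
        if seg.end_s > clip.duration_s:
            problems.append(f"segment {i}: ends after the audio does")
        if seg.start_s < previous_end:
            problems.append(f"segment {i}: overlaps the previous segment")
        if not seg.transcript.strip():
            problems.append(f"segment {i}: empty transcript")
        previous_end = max(previous_end, seg.end_s)
    return problems


# Example: a short clip from an imagined healthcare app.
clip = AnnotatedClip(
    audio_path="clips/example_0001.wav",  # placeholder path
    duration_s=6.0,
    language="en",
    segments=[
        Segment(0.0, 2.5, "patient", "I need to refill my prescription",
                {"intent": "refill_request"}),
        Segment(2.8, 5.9, "assistant", "Sure, which medication is it?"),
    ],
)

print(validate(clip) or "clip passed all checks")
```

Checks like these catch only mechanical mistakes such as overlapping timestamps or empty transcripts. Whether a transcript or label is actually correct still has to be judged by trained annotators and reviewers.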

If you want to outsource voice annotation, contact LQA now for instant support.

- Website: https://www.lotus-qa.com/

- Tel: (+84) 24-6660-7474

- Fanpage: https://www.facebook.com/LotusQualityAssurance